Deploying Airflow on AWS for Large Scale

We were seeking a scalable, enterprise solution to host our Airflow platform.

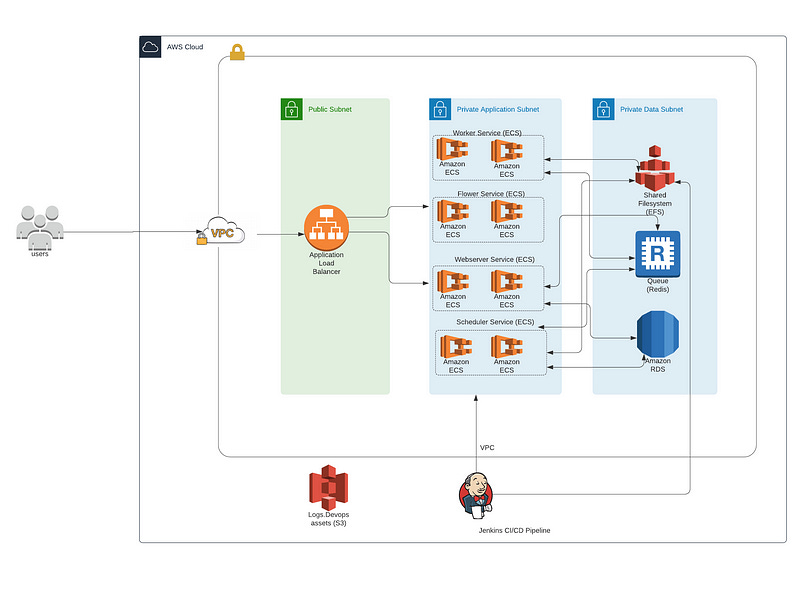

Amazon Web Services (AWS) infrastructure and compute services make it possible to readily provision a scalable, redundant, fault-tolerant solution. The primary factors in the design of the solution are multi-environment deployment and management, reliability, security, ease of administration, and operating cost.

Scope of Solution

The scope of this solution includes the provisioning of resources and services used across all environments (e.g., "Terraform remote state") as well as the initial configuration of a single environment for development purposes (i.e., the "dev environment").

Configuration and adjustment of the environment after the initial configuration is complete will be handled separately.

Additional environments (e.g., "staging", "pre-prod", "prod") can be provisioned as needed, and are also handled separately.

SOLUTION COMPONENTS

Tenancy, Environment and Account Model

Tenancy: The solution serves a single tenant (Sequoia). It is composed of the application deployed within an environment, and each environment includes its own VPC, routes, subnets, security configuration, and other AWS resources.

A best-practice three-tier network security model will be used to segment access to resources across the following subnets:

public

private

data
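As a rough illustration of the three-tier layout, the sketch below carves a VPC address range into one subnet per tier per availability zone. The VPC CIDR, the /20 subnet size, and the number of availability zones are assumptions made for the example, not values from the actual deployment.

```python
import ipaddress

# The three tiers named above; the order decides which address blocks each tier gets.
TIERS = ("public", "private", "data")


def plan_subnets(vpc_cidr: str, az_count: int, new_prefix: int = 20) -> dict:
    """Assign one subnet per tier per availability zone from the VPC range."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # consecutive, non-overlapping blocks
    plan = {}
    for tier in TIERS:
        plan[tier] = [str(next(blocks)) for _ in range(az_count)]
    return plan


if __name__ == "__main__":
    # Hypothetical VPC range and AZ count, chosen only for illustration.
    for tier, cidrs in plan_subnets("10.0.0.0/16", az_count=2).items():
        print(tier, cidrs)
```

Keeping each tier in its own contiguous blocks makes it straightforward to write routing and security rules per tier, for example allowing the data subnets to be reached only from the private subnets.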

Environments: The solution will be deployed multiple times into separate environments, one for each stage of the development lifecycle. These environments align with the following code promotion path:

dev/staging => pre-prod => prod

Accounts: The solution will be spread across multiple AWS accounts for governance and management purposes. The production account will host the pre-prod and prod environments, while the non-production account will host the dev and staging environments.
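The environment and account model can be written down as plain data, which is handy when a pipeline needs to know where a promotion leads. The sketch below is only an illustration. It assumes that "dev/staging" in the promotion path means dev and staging sit at the same stage, and the function names are hypothetical.

```python
# Which AWS account hosts each environment, as described above.
ACCOUNT_FOR_ENV = {
    "dev": "non-production",
    "staging": "non-production",
    "pre-prod": "production",
    "prod": "production",
}

# Promotion path: dev/staging => pre-prod => prod.
# Assumption: dev and staging share the first stage.
PROMOTION_STAGES = [("dev", "staging"), ("pre-prod",), ("prod",)]


def next_environments(env: str) -> tuple:
    """Return the environments that code in `env` is promoted to next."""
    for i, stage in enumerate(PROMOTION_STAGES):
        if env in stage:
            is_last = i + 1 == len(PROMOTION_STAGES)
            return () if is_last else PROMOTION_STAGES[i + 1]
    raise ValueError(f"unknown environment: {env}")


def crosses_account_boundary(env: str) -> bool:
    """True when promoting from `env` moves code into a different AWS account."""
    return any(
        ACCOUNT_FOR_ENV[nxt] != ACCOUNT_FOR_ENV[env]
        for nxt in next_environments(env)
    )
```

With this model, promoting from staging to pre-prod is the only step that crosses from the non-production account into the production account, which is a natural place for an extra approval gate.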

Infrastructure-as-Code — Terraform

This solution will be created following Infrastructure-as-Code principles. Terraform (https://www.terraform.io/) is a tool for building, changing, and versioning infrastructure safely and efficiently.

With Terraform manifests for this solution, each environment can be managed, updated, and tracked through source control, and changes to it can be automated.

Additionally, the configuration manifests can be reused to deploy the environment multiple times, reducing effort and keeping the environments consistent with one another.
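One way to reuse the same manifests for every environment is to keep a small per-environment backend file and variable file, and pass them to the Terraform CLI. The sketch below only builds the command lines. The `backends/` and `environments/` directory layout is an assumption for this example, not the layout used in the actual repository.

```python
def terraform_commands(env: str) -> list:
    """Build the init/plan/apply command lines for one environment."""
    plan_file = f"{env}.tfplan"
    return [
        # -reconfigure avoids reusing backend settings cached from another environment.
        ["terraform", "init", "-reconfigure", f"-backend-config=backends/{env}.hcl"],
        # The same manifests are planned with environment-specific variables.
        ["terraform", "plan", f"-var-file=environments/{env}.tfvars", f"-out={plan_file}"],
        # Applying a saved plan ensures exactly what was reviewed gets applied.
        ["terraform", "apply", plan_file],
    ]


if __name__ == "__main__":
    for command in terraform_commands("dev"):
        print(" ".join(command))
```

Each list could be passed to `subprocess.run(command, check=True)` from a pipeline step. The only thing that changes between environments is the name passed in.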

Each environment will be designed for fault-tolerance and autoscaling to ensure uptime and reliability.

Additionally, each environment will have its own custom scaling parameters to ensure cost optimisation.
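To make the idea of per-environment scaling parameters concrete, here is a minimal sketch. The numbers are invented for illustration, and `desired_count` is a deliberately simplified stand-in for what the AWS autoscaling service does, not a description of its actual algorithm.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingParams:
    min_tasks: int
    max_tasks: int
    target_cpu_percent: int

    def __post_init__(self):
        if not 1 <= self.min_tasks <= self.max_tasks:
            raise ValueError("require 1 <= min_tasks <= max_tasks")
        if not 0 < self.target_cpu_percent <= 100:
            raise ValueError("target_cpu_percent must be in (0, 100]")


# Hypothetical values: a small, cheap dev environment and a larger prod one.
WORKER_SCALING = {
    "dev": ScalingParams(min_tasks=1, max_tasks=2, target_cpu_percent=75),
    "prod": ScalingParams(min_tasks=2, max_tasks=10, target_cpu_percent=60),
}


def desired_count(params: ScalingParams, current: int, cpu_percent: float) -> int:
    """Scale the task count in proportion to load, clamped to the allowed range."""
    wanted = math.ceil(current * cpu_percent / params.target_cpu_percent)
    return max(params.min_tasks, min(params.max_tasks, wanted))
```

The low `max_tasks` ceiling in dev caps spend regardless of load, while the `min_tasks` floor in prod keeps more than one task running for fault tolerance.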

CI/CD Pipelines

Continuous Integration, Continuous Delivery, and Continuous Deployment (CI/CD) pipelines automate the building, testing, and deployment of software solutions.

Jenkins (https://jenkins.io/) is an open source automation server which enables developers around the world to reliably build, test, and deploy their software.

The solution contains a Jenkins pipeline to build and deploy each containerized component, one for each of the ECS services listed under Application Architecture, as well as a Jenkins pipeline for DAGs.

Application Architecture

The Airflow (https://airflow.apache.org/) application consists of multiple components.

Many of these components will be containerized and deployed within AWS’s Elastic Container Service (ECS) using Fargate, which facilitates cost-savings and simplicity of management with automated scaling of ECS Services.

Each of the following components will be deployed as ECS Tasks in its own ECS Service:

Airflow Webserver

Flower for Celery

Airflow Worker

Airflow Scheduler
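Each of these services runs the same Airflow image with a different container command. The mapping below is a sketch based on the Airflow CLI, where the Celery subcommands were renamed between the 1.10 and 2.x releases. The function name and the idea of selecting by major version are assumptions for this example.

```python
def service_commands(airflow_major: int) -> dict:
    """Return the container command for each ECS service, by Airflow major version."""
    if airflow_major == 1:
        worker, flower = ["airflow", "worker"], ["airflow", "flower"]
    elif airflow_major == 2:
        # Airflow 2.x moved the Celery commands under the "celery" group.
        worker, flower = ["airflow", "celery", "worker"], ["airflow", "celery", "flower"]
    else:
        raise ValueError(f"unsupported Airflow major version: {airflow_major}")
    return {
        "webserver": ["airflow", "webserver"],
        "flower": flower,
        "worker": worker,
        "scheduler": ["airflow", "scheduler"],
    }
```

Running each command in its own ECS Service lets the workers scale independently of the webserver and the scheduler.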

In Airflow, a DAG — or a Directed Acyclic Graph — is a collection of all the tasks that should be run, organized in a way that reflects their relationships and dependencies.
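The dependency idea behind a DAG can be shown without Airflow at all. The sketch below uses Python's standard `graphlib` module (Python 3.9+) rather than Airflow's own API, and the task names are made up. It shows that a valid run order always places a task after everything it depends on, and that a cycle is rejected.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies: dict) -> list:
    """Return one valid run order for a mapping of task -> tasks it depends on."""
    return list(TopologicalSorter(dependencies).static_order())


# Hypothetical pipeline: two tasks fan out from "clean" and join at "publish".
PIPELINE = {
    "extract": set(),
    "clean": {"extract"},
    "aggregate": {"clean"},
    "validate": {"clean"},
    "publish": {"aggregate", "validate"},
}

if __name__ == "__main__":
    print(execution_order(PIPELINE))
    try:
        # "a" depends on "b" and "b" on "a": not acyclic, so there is no valid order.
        execution_order({"a": {"b"}, "b": {"a"}})
    except CycleError as err:
        print("rejected:", err.args[0])
```

The relative order of "aggregate" and "validate" is not fixed, since neither depends on the other. In Airflow, independent tasks like these can be handed to different workers at the same time.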

Deployment of updates to DAGs will be automated, using commits to source control repositories which trigger CI/CD pipelines to test and deploy the changes.

A common deployment scenario is a scripted update to a shared filesystem (such as Elastic File System, EFS) location that is monitored by and synchronized with the Airflow application.

For example, a commit to a GitHub repo would cause a pipeline to be initiated in Jenkins. The pipeline would execute a script that synchronizes the content of the GitHub branch with the shared filesystem, resulting in the Airflow application being updated with the changes.
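The synchronization step itself can be quite small. The following is a minimal sketch of such a script, assuming the pipeline has already checked out the branch to a local directory and that the shared filesystem is mounted at a known path. Both paths, and the choice to mirror only `.py` files, are assumptions for the example.

```python
import filecmp
import shutil
import tempfile
from pathlib import Path


def sync_dags(source: Path, target: Path) -> dict:
    """Mirror the .py files under source into target; report what changed."""
    target.mkdir(parents=True, exist_ok=True)
    copied, removed = [], []
    source_files = {p.relative_to(source) for p in source.rglob("*.py")}

    # Copy new or modified files.
    for rel in sorted(source_files):
        dst = target / rel
        if not dst.exists() or not filecmp.cmp(source / rel, dst, shallow=False):
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(source / rel, dst)
            copied.append(rel.as_posix())

    # Remove files that no longer exist in the repository.
    for dst in sorted(target.rglob("*.py")):
        rel = dst.relative_to(target)
        if rel not in source_files:
            dst.unlink()
            removed.append(rel.as_posix())

    return {"copied": copied, "removed": removed}


if __name__ == "__main__":
    # Self-contained demo using temporary directories in place of the repo and EFS.
    with tempfile.TemporaryDirectory() as tmp:
        repo, efs = Path(tmp) / "repo", Path(tmp) / "efs"
        repo.mkdir()
        (repo / "example_dag.py").write_text("# DAG definition goes here\n")
        print(sync_dags(repo, efs))
```

Removing stale files matters as much as copying new ones, because Airflow would otherwise keep loading DAGs that have been deleted from source control.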

Shared configuration will be stored in a redundant, fault-tolerant database using RDS MySQL. Additionally, the queue of tasks will be backed by a Redis database.
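Airflow can read its settings from `AIRFLOW__SECTION__KEY` environment variables, which fits well with ECS task definitions. The sketch below shows how the MySQL and Redis endpoints might be wired in for the Celery executor. It assumes the RDS database is Airflow's metadata database, the host names and credentials are placeholders, and the `CORE` section for `sql_alchemy_conn` applies to older Airflow releases (from 2.3 the setting lives in the `DATABASE` section).

```python
from urllib.parse import quote_plus


def airflow_celery_env(db_host: str, db_user: str, db_password: str,
                       db_name: str, redis_host: str) -> dict:
    """Build environment variables pointing Airflow at MySQL and Redis."""
    # URL-encode the password so characters such as "@" do not break the URL.
    password = quote_plus(db_password)
    db_url = f"mysql://{db_user}:{password}@{db_host}:3306/{db_name}"
    return {
        "AIRFLOW__CORE__EXECUTOR": "CeleryExecutor",
        # Assumption: older Airflow; from 2.3 use AIRFLOW__DATABASE__SQL_ALCHEMY_CONN.
        "AIRFLOW__CORE__SQL_ALCHEMY_CONN": db_url,
        # Redis holds the queue of tasks waiting for a worker.
        "AIRFLOW__CELERY__BROKER_URL": f"redis://{redis_host}:6379/0",
        # Celery task results are stored in the same MySQL database.
        "AIRFLOW__CELERY__RESULT_BACKEND": f"db+{db_url}",
    }
```

In practice the password would come from a secrets store rather than being passed around in plain text. Every ECS service listed earlier needs the same values so that the webserver, scheduler, and workers all see the same state.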

Lastly, the website components of the application will be served through an Application Load Balancer (ALB) to facilitate running multiple copies of the website components to achieve fault-tolerance.